Tech

Elon Musk Slams Physician “I Eat a Donut a Day and I’m Still Alive”

Elon Musk, who previously stated that he would rather “eat tasty food and live a shorter life,” has kept his word, saying he enjoys a breakfast donut daily.

In response to a tweet from Peter Diamandis, a physician and the CEO of the non-profit organization XPRIZE, the Twitter CEO revealed his sweet tooth.

On Tuesday, Diamandis tweeted, “Sugar is poison.” Musk replied: “I eat a donut every morning. Still alive.”

I eat a donut every morning. Still alive.

— Elon Musk (@elonmusk) March 28, 2023

At press time, Musk’s tweet had been viewed more than 11.4 million times.

Musk’s daily donut diet revelation is unsurprising, given his previous remarks about his eating habits.

In 2020, Musk told podcaster Joe Rogan, “I’d rather eat tasty food and live a shorter life.” Musk said that while he works out, he “wouldn’t exercise at all” if he could.

According to CNBC, it’s unclear whether Musk’s diet was influenced by his mother, Maye Musk, a model who worked as a dietitian for 45 years.

Musk is not the only celebrity with unusual eating habits.

Rep. Nancy Pelosi, the former House Speaker, survives — and thrives — on a diet of breakfast ice cream, hot dogs, pasta, and chocolate.

Former President Donald Trump has a well-documented fondness for fast food, telling a McDonald’s employee in February that he knows the menu “better than anyone” who works there.

Amazon founder Jeff Bezos enjoys octopus for breakfast, and Meta CEO Mark Zuckerberg prefers to eat meat from animals he has slaughtered himself.

Musk representatives did not respond immediately to Insider’s request for comment after regular business hours.

Elon Musk Wants Pause on AI Work

Meanwhile, four artificial intelligence experts have expressed concern after their work was cited in an open letter co-signed by Elon Musk calling for an immediate halt to research.

The letter, dated March 22 and with over 1,800 signatures as of Friday, demanded a six-month moratorium on developing systems “more powerful” than Microsoft-backed (MSFT.O) OpenAI’s new GPT-4, which can hold human-like conversations, compose songs, and summarize lengthy documents.

Since the release of GPT-4’s predecessor, ChatGPT, last year, competitors have rushed to release similar products.

According to the open letter, AI systems with “human-competitive intelligence” pose grave risks to humanity, citing 12 pieces of research from experts such as university academics and current and former employees of OpenAI, Google (GOOGL.O), and its subsidiary DeepMind.

Since then, civil society groups in the United States and the European Union have urged lawmakers to limit OpenAI’s research. OpenAI did not immediately return requests for comment.

Critics have accused the Future of Life Institute (FLI), primarily funded by the Musk Foundation and behind the letter, of prioritizing imagined apocalyptic scenarios over more immediate concerns about AI, such as racist or sexist biases being programmed into the machines.

“On the Dangers of Stochastic Parrots,” a well-known paper co-authored by Margaret Mitchell, who previously oversaw ethical AI research at Google, was cited.

Mitchell, now the chief ethical scientist at Hugging Face, slammed the letter, telling Reuters that it was unclear what constituted “more powerful than GPT4”.

“By taking a lot of dubious ideas for granted, the letter asserts a set of priorities and a narrative on AI that benefits FLI supporters,” she explained. “Ignoring current harms is a privilege some of us do not have.”

On Twitter, her co-authors Timnit Gebru and Emily M. Bender slammed the letter, calling some of its claims “unhinged.”

FLI president Max Tegmark told Reuters that the campaign was not an attempt to undermine OpenAI’s competitive advantage.

“It’s quite amusing; I’ve heard people say, ‘Elon Musk is trying to slow down the competition,’” he said, adding that Musk had no involvement in the letter’s creation. “This isn’t about a single company.”

RISKS RIGHT NOW

Shiri Dori-Hacohen, an assistant professor at the University of Connecticut, took issue with the letter mentioning her work. She co-authored a research paper last year arguing that the widespread use of AI already posed serious risks.

Her research claimed that the current use of AI systems could influence decision-making in the face of climate change, nuclear war, and other existential threats.

“AI does not need to reach human-level intelligence to exacerbate those risks,” she told Reuters.

“There are non-existential risks that are extremely important but don’t get the same level of Hollywood attention.”

When asked about the criticism, FLI’s Tegmark stated that AI’s short-term and long-term risks should be taken seriously.

“If we cite someone, it just means we claim they’re endorsing that sentence, not the letter or everything they think,” he told Reuters.

Dan Hendrycks, director of the California-based Center for AI Safety, also cited in the letter, defended its contents, telling Reuters that it was prudent to consider black swan events – those that appear unlikely but have catastrophic consequences.

According to the open letter, generative AI tools could be used to flood the internet with “propaganda and untruth.”

Dori-Hacohen called Musk’s signature “pretty rich,” citing a reported increase in misinformation on Twitter following his acquisition of the platform, as documented by the civil society group Common Cause and others.

Twitter will soon introduce a new fee structure for access to its data, which could hinder future research.

“That has had a direct impact on my lab’s work, as well as the work of others studying misinformation and disinformation,” Dori-Hacohen said. “We’re doing our work with one hand tied behind our back.”

Musk and Twitter did not respond immediately to requests for comment.

Tech

AI Model Aitana Lopez “Racks Up” Over 300K Instagram Followers

Artificial intelligence (AI) is nearly ubiquitous, occupying video and audio environments. In recent years, the K-pop music industry has used deep-fake technology to create groups that resemble actual individuals. Now meet Aitana, Spain’s first AI model.

Eternity and Mave, virtual female groups developed using artificial intelligence, have blurred the barriers between entertainment and technology. Whether we like it or not, these accelerating and frightening shifts are here to stay.

Influencers are among AI’s latest targets. Aitana Lopez, a 25-year-old AI-powered influencer from Spain, is a pioneer in the field. Her Instagram account, @fit_aitana, strikes visitors with its eerie realism: her “virtual soul” has been deliberately given a personality that is exceptionally lifelike and resembles that of a real-life model.

Her Instagram account already has over 300,000 followers.


Aitana was created by Ruben Cruz, founder of the AI modeling agency The Clueless.

According to Euronews, Cruz’s breakthrough idea was fueled by the agency’s struggle to form genuine business relationships with real-life influencers. “Many projects were put on hold due to problems beyond our control,” the Clueless creator stated.

The Clueless describes Aitana, who is 25 years old, as a “strong and determined woman, independent in her actions and generous in her willingness to help others”. The AI model is also described as a Scorpio with a passion for video games and a commitment to fitness. Her vibrantly crafted design highlights Aitana as a standout with a multifaceted personality.

Designers working on Aitana’s images at the agency. (Photo: The Clueless Agency)

The Clueless Agency describes the AI influencer with eye-catching pink hair as an outgoing persona with “complicated humour and self-centeredness.”

The AI-powered “gamer at heart and fitness lover” was born on December 11, 1998. Her Instagram photos depict a digitally produced universe that reinforces the notion that she is engaging in real-world activities.

Following her trendsetting debut, the AI agency unveiled her other virtual companion, The Clueless’ second AI model, Maia Lima.

Like K-pop’s AI experiments, which drew controversy and criticism, the rise of AI influencers has pushed unrealistic beauty standards.

While it is possible to focus on current technological advancements, this side of the virtual story is not without concerns for the future.

Tech

Understanding HTTP Error 404: Meaning and Navigation Tips

Introduction to Error 404

A 404 error can be very frustrating for users browsing the web. It indicates that the requested page is unavailable on the server. 404 is a standard HTTP response code: it means the client was able to communicate with the server, but the server could not locate the requested resource.

How Does Error 404 Occur?

There are several reasons why an error 404 occurs:

- Page Deletion: The most common cause is accidentally or purposely removing a page from the server.
- URL Mistyping: The user may enter the wrong URL in the address bar, resulting in a 404 error if the server cannot locate the correct URL.
- Broken Links: A 404 error may also occur if a link points to a non-existent page.
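Broken links are the easiest of these causes to detect automatically. The following is a minimal sketch using only Python’s standard library; the function names `fetch_status` and `find_broken_links` are made up for this illustration, and it assumes the target servers answer HEAD requests (some do not, in which case a GET would be needed).

```python
from urllib.error import HTTPError, URLError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=5.0):
    """Return the HTTP status code for url, or None if the server is unreachable."""
    request = Request(url, method="HEAD")
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as err:
        # urllib raises HTTPError for 4xx/5xx responses; the code is on the exception.
        return err.code
    except URLError:
        # DNS failure, refused connection, timeout, and similar network problems.
        return None


def find_broken_links(urls, get_status=fetch_status):
    """Return the URLs that respond with 404 Not Found."""
    return [url for url in urls if get_status(url) == 404]
```

Passing the status lookup in as `get_status` keeps the function easy to try without network access, for example by supplying a dictionary’s `get` method in place of the real fetcher.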

Impact of Error 404 on SEO and User Experience

SEO Implications

Search engines such as Google regularly crawl websites to index their content, and encountering 404 errors on a website may have negative consequences for SEO. When a search engine finds numerous 404 errors on a site, it may interpret them as a sign of poor maintenance or a lack of valuable content, resulting in lower rankings.

User Experience

404 errors can be irritating for users. They disrupt the browsing flow and can discourage them from returning to the site. Poorly handled 404 errors reflect negatively on the site’s professionalism and reliability.

Best Practices for Handling Error 404

Following these best practices will assist you in effectively managing and mitigating the impact of 404 errors:

- Custom Error Pages: Give users useful information on custom 404 error pages, such as links to alternative pages or a search bar.
- Regular Monitoring: Use tools and services to monitor for 404 errors, and resolve them as soon as possible by fixing broken links or redirecting users to relevant content.
- 301 Redirects: Use 301 redirects to send users to a new page when a page has been permanently moved or deleted.
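As a rough illustration of the first and third practices, here is a small sketch built on Python’s standard `http.server` module. The page contents, the redirect map, and the names `resolve`, `SiteHandler`, and `run` are all hypothetical; a production site would normally configure this in its web server or framework instead.

```python
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical site content and redirect map, for illustration only.
PAGES = {"/": "<h1>Home</h1>", "/blog": "<h1>Blog</h1>"}
REDIRECTS = {"/old-blog": "/blog"}

# Custom 404 page that points visitors to pages that do exist.
NOT_FOUND_PAGE = (
    "<h1>Page not found</h1>"
    '<p>Try the <a href="/">home page</a> or the <a href="/blog">blog</a>.</p>'
)


def resolve(path):
    """Map a request path to a (status, extra_headers, body) tuple."""
    if path in REDIRECTS:
        return HTTPStatus.MOVED_PERMANENTLY, {"Location": REDIRECTS[path]}, ""
    if path in PAGES:
        return HTTPStatus.OK, {}, PAGES[path]
    return HTTPStatus.NOT_FOUND, {}, NOT_FOUND_PAGE


class SiteHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Ignore any query string when looking up the page.
        status, headers, body = resolve(urlsplit(self.path).path)
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(payload)))
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()
        self.wfile.write(payload)


def run(port=8000):
    """Serve the demo site locally until interrupted."""
    HTTPServer(("127.0.0.1", port), SiteHandler).serve_forever()
```

Calling `run()` starts the demo on port 8000. Keeping the routing decision in `resolve` separates it from the network code, so the redirect and not-found behavior can be checked without starting a server.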

Technical Overview: HTTP Status Codes

HTTP status codes are three-digit codes that indicate the outcome of an HTTP request. Common examples include:

- 200 OK: Request succeeded.
- 301 Moved Permanently: Resource has been permanently moved.
- 404 Not Found: The requested resource could not be found on the server.
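Python’s standard library exposes these codes through the `http.HTTPStatus` enum, which makes for a quick way to look them up. The helper names `describe` and `category` below are invented for this sketch; the grouping by first digit follows the usual convention (2xx success, 3xx redirection, 4xx client error, 5xx server error).

```python
from http import HTTPStatus


def describe(code):
    """Return the code together with its standard reason phrase."""
    status = HTTPStatus(code)
    return f"{status.value} {status.phrase}"


def category(code):
    """Classify a status code by its first digit."""
    names = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    return names.get(code // 100, "unknown")


for code in (200, 301, 404):
    print(describe(code), "->", category(code))
```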

Conclusion

HTTP error 404 (Not Found) indicates that a requested webpage is unavailable on the server. If not addressed promptly, this error can adversely affect SEO and user experience. A website owner can effectively manage 404 errors and provide a seamless browsing experience for users by implementing best practices such as custom error pages, regular monitoring, and proper redirects.

Tech

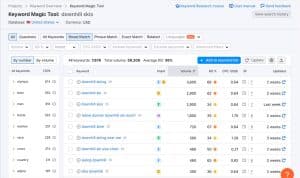

5 Most Advanced Keyword Research Tools to Use in 2024

Ask a dozen digital marketers which keyword research tools they use, and you’ll probably get 12 different answers. If you’re familiar with these platforms, you’ll understand why such a wide range of opinions exists. Every keyword tool has its own features and varies in how deep its data goes.

For your SEO strategy to work, you need a reliable keyword research tool to help you determine what your customers are searching for. With so many options on the market, how do you pick one that meets your business’s needs and budget?

Here are 5 keyword research tools to help you climb the SERPs and stay in the search engine spotlight.

Short List of Keyword Research Tools

We compared keyword tools based on ease of use and metrics. We’ll then discuss each platform’s pros, cons, and intricacies to ensure it’s right for you.

- Moz: Best overall
- Semrush: Best for user intent analysis
- Ahrefs: Best for keyword tracking and analysis
- QuestionDB: Best for long-tail keywords
- Google Keyword Planner: Best for paid advertising keywords

The Science Behind Keyword Research

Because each tool collects and processes data differently, metrics like search volume, difficulty, and page authority will vary.

Tools scrape the web for data, including:

- Search engine results pages
- Autocomplete suggestions
- Google’s related searches and People Also Ask
- Google Trends and Google Keyword Planner
- Forums and social networks
- Clickstream data that tracks user movements

The best ones also inspect top-ranked pages for content, keyword frequency, and backlinks. Artificial intelligence can help interpret this data, pinpointing patterns that can help you gain ground.

Using different data sources and processes, each platform magically turns big data into the metrics you see on your screen.

It’s best to compare keywords within a tool, not across platforms. If you wanted to choose between “picture frames” and “wall art,” you wouldn’t compare one keyword’s metrics on Ahrefs against the other’s on Semrush. However, you can be sure comparisons run on a single platform are reliable.

For example, when Andrei Prakharevich compared several tools, each reported a different number, but “mountain bikes” came out as the most popular keyword and “gravel bikes” as the least popular. That relative order, not the absolute figure, is key to choosing a keyword.
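The point about relative order can be sketched in a few lines of Python. All figures below are invented for illustration, as are the tool names and the extra “road bikes” keyword; only the idea that the tools disagree on numbers but agree on ranking comes from the comparison described above.

```python
# Hypothetical search volumes; real tools will report different figures.
VOLUMES = {
    "tool_a": {"mountain bikes": 90000, "road bikes": 40000, "gravel bikes": 12000},
    "tool_b": {"mountain bikes": 61000, "road bikes": 33000, "gravel bikes": 9000},
}


def rank_keywords(volumes):
    """Order keywords from most to least searched within one tool."""
    return sorted(volumes, key=volumes.get, reverse=True)


# Rank each tool's keywords separately, never mixing numbers across tools.
rankings = {tool: rank_keywords(volumes) for tool, volumes in VOLUMES.items()}

for tool, ranking in rankings.items():
    print(tool, ranking)
```

Although no absolute number matches between the two hypothetical tools, both produce the same ordering, which is the information a keyword decision actually depends on.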

Essential Features for Keyword Research Tools

We’re going to put together a keyword tool wish list. Ideally, you want a large database of keyword ideas and reliable metrics so you can decide what to target.

Some essential features include:

- Keyword suggestions: A robust tool provides many keywords. Some use Google, YouTube, Amazon, and other search engines. If you’re trying to run an international business, check out a platform that lets you search by language and region.
- Search volume: There’s no getting around this metric. Search volume tells you how many times a keyword has been searched. It doesn’t make sense to rank for keywords that aren’t popular.
- Keyword difficulty: Based on the strength of the pages currently ranking, this metric shows how hard it is to rank for a keyword. Keywords with low to medium difficulty can help you get more visibility while you tackle more competitive terms.
- Search intent: It is important to match your content to the user’s expectations. Some tools tell you a keyword’s search intent, such as navigational, commercial, informational, or transactional.
- Competitor analysis: Compare competitor keywords to your own to ensure you don’t miss any opportunities. You can use some tools to see how your competitors rank and what backlinks they’ve built.
- Website authority: Depending on the tool, this is also known as domain authority, domain rating, or authority score. It reflects the overall credibility of a website based on factors like backlinks.

Some platforms have other features, like site audits, on-page SEO recommendations, and content creation tools, but they’re not covered here.
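To show how search volume and keyword difficulty work together when picking targets, here is a small Python sketch. The `Keyword` class, the `shortlist` function, the thresholds, and every number are assumptions made up for this example; no tool’s actual export format or scoring is implied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyword:
    phrase: str
    volume: int      # how many times the keyword has been searched
    difficulty: int  # assumed 0-100 scale, higher is harder to rank for


def shortlist(keywords, min_volume, max_difficulty):
    """Keep keywords that are popular enough and not too hard, most searched first."""
    picks = [
        k for k in keywords
        if k.volume >= min_volume and k.difficulty <= max_difficulty
    ]
    return sorted(picks, key=lambda k: k.volume, reverse=True)


# Hypothetical data for illustration only.
CANDIDATES = [
    Keyword("wall art", 50000, 72),
    Keyword("picture frames", 30000, 45),
    Keyword("floating picture frames", 4000, 28),
    Keyword("diy wall art ideas", 900, 15),
]

for keyword in shortlist(CANDIDATES, min_volume=1000, max_difficulty=50):
    print(keyword.phrase, keyword.volume, keyword.difficulty)
```

With these made-up thresholds, the most popular term is dropped for being too competitive and the least popular one for having too little demand, leaving the low- to medium-difficulty keywords in between.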

5 Advanced Keyword Research Tools for 2024

Whether you’re a small startup or an enterprise, we think these keyword research tools are worth your time. We’ve got free and paid options, from basic to comprehensive solutions. You might find one or two free tools that meet your needs. Take advantage of free trials and give them a shot.

1. Moz Keyword Explorer

Best Overall

Intro to the Tool

Moz’s Keyword Explorer offers a wide range of metrics in an easy-to-navigate format. Our favorite part is its proprietary Priority Score, which helps you find keyword opportunities without getting bogged down with numbers. A paid subscription also gives you rank tracking, site crawls, on-page optimization, link research, and custom reports. A free version of Keyword Explorer is available but limited in scope.

Special Features Highlight

Moz throws you a lifeline if you’re drowning in data when analyzing keywords. It combines factors like search volume, keyword difficulty, and opportunity into a single Priority Score, which helps you identify keywords with good ranking potential. As Rand Fishkin explained, a Priority Score of 80 indicates high demand, moderate difficulty, and few SERP features detracting from organic search. A lower score means one or more of these factors is less favorable.

Pricing

- Free for 10 keyword queries
- Monthly subscriptions range from $99 to $599

2. Semrush

Best for User Intent Analysis

Intro to the Tool

Semrush’s breadth is hard to beat. The platform has more than 55 tools, including keyword research, site audits, PPC, backlinking, and website optimization, making it an end-to-end digital marketing tool. You can start with free tools like Keyword Magic and Keyword Overview, but full access comes at a price.

Special Features Highlight

Keywords aren’t the only thing you need for SEO. You need to understand why people use that keyword so you can create content that meets their needs. Are they looking to buy something, find a deal, or learn something? Semrush uses an algorithm to tag keywords as having navigational, informational, commercial, or transactional intent. You’ll see these tags on the Keyword Results page in the Search Intent column. Plan content by grouping keywords with the same intent.
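Grouping keywords by intent is simple to do once the tags are exported. The sketch below assumes a plain list of (keyword, intent) pairs; the sample keywords and the `group_by_intent` name are invented here and do not reflect Semrush’s export format or API.

```python
from collections import defaultdict

# Hypothetical keyword/intent pairs, for illustration only.
TAGGED = [
    ("buy picture frames online", "transactional"),
    ("best picture frames", "commercial"),
    ("how to hang wall art", "informational"),
    ("picture frame discount code", "transactional"),
    ("what size frame for 8x10 print", "informational"),
]


def group_by_intent(tagged):
    """Collect keywords into lists keyed by their intent tag."""
    groups = defaultdict(list)
    for phrase, intent in tagged:
        groups[intent].append(phrase)
    return dict(groups)


for intent, phrases in group_by_intent(TAGGED).items():
    print(intent, phrases)
```

Each resulting group can then feed one content plan, for instance how-to guides for the informational group and product pages for the transactional one.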

Pricing

- Free account with limited queries
- 7-day free trial
- Monthly subscriptions range from $129.95 to $499.95

3. Ahrefs

Best for Keyword Tracking and Analysis

Intro to the Tool

Ahrefs is a leading search optimization tool, often grouped with Moz and Semrush. It’s great for tracking analytics and performance, and it also handles keyword research, link building, competitor analysis, content creation, and website audits.

Special Features Highlight

Ahrefs’ dashboard lets you monitor site performance by pulling key metrics from various sources.

Click specific boxes to see the details behind the report and adjust your SEO strategy as needed. You can also monitor changes in your site health, domain rating, organic keywords, backlinks, and traffic.

Pricing

- Monthly subscriptions range from $99 to $999

4. QuestionDB

Best for Long-Tail Keywords

Intro to the Tool

By pulling data from forums where users answer each other’s questions, QuestionDB provides long-tail keywords in the form of questions.

Special Features Highlight

Using QuestionDB, you can spy on how your audiences chat naturally around a topic, which aligns perfectly with the conversational style of voice search and Google’s Search Generative Experience (SGE).

Due to their specificity, question keywords have a lower search volume. However, they’re less competitive and can help you reach highly qualified, niche audiences with precise search intent. Build topic clusters and establish expertise with QuestionDB.

Pricing

- Free plan (up to 60 questions and no data)
- $15/month Solo Plan (100 searches per month)
- $50/month Agency Plan (500 searches per month)

5. Google Keyword Planner

Best for Paid Advertising Keywords

Intro to the Tool

Keyword Planner helps advertisers pick relevant keywords and estimate their ad spend. Because the data comes directly from Google, SEO specialists also use the tool to drive organic traffic. You can find Keyword Planner in the Tools menu of your Google Ads account. Although it has limited features, it can help you find lucrative keywords and key themes you can use to build topic clusters.

Special Features Highlight

Keyword Planner doesn’t identify search intent, but you can still use it to find keywords with high commercial intent. Sort the keyword results by “Top of Page bid (high range)” to see which keywords advertisers will pay top dollar for, so you’re more likely to get lucrative traffic.

Pricing

- Free with a Google Ads account

The Future of Keyword Research

Although you’ll need a good keyword research tool (or two), there’s one thing to remember: modern SEO is moving from keyword-centric to user-centric. Google sent us down this path with its helpful content system, which prioritizes useful information.

That means you still need to know your primary keyword but don’t have to fit lists of semantic keywords into your content as much. Search engines often don’t rely on exact keyword matches anymore because they’re so good at understanding context.

The value of keyword research is to figure out what your audience wants to know about a topic and in what format. In other words, you’re relying not on keywords but on themes. You must put in some effort, assess the competition, and create charts, videos, FAQs, and other content to satisfy your audience.
